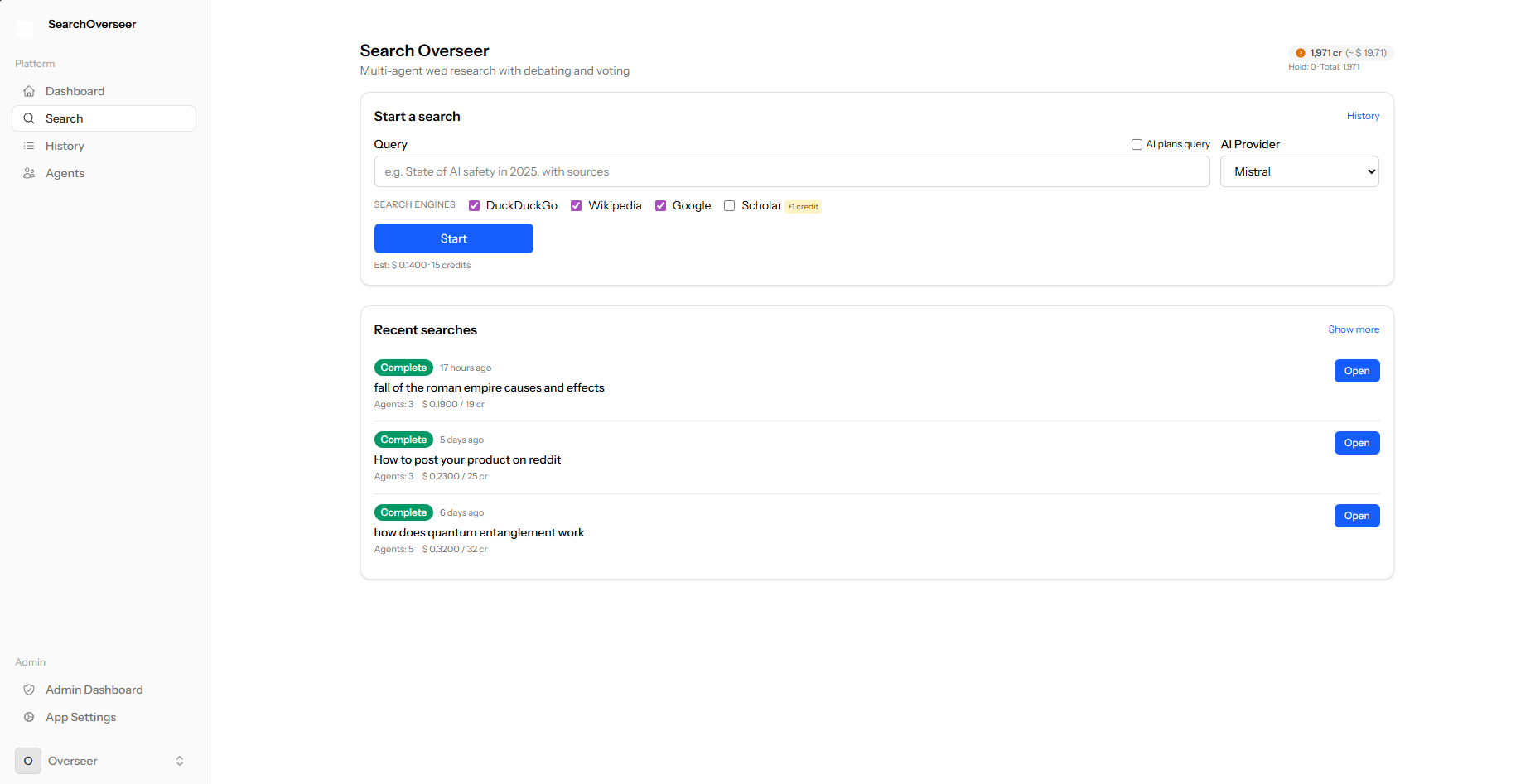

SearchOverseer

Accelerate Evidence‑Based Research & Analysis

Search Overseer orchestrates multiple specialized AI research agents that collect, critique, and synthesize evidence from diverse, high‑quality sources. It is designed for researchers, analysts, journalists, and policy teams who need transparent reasoning, not just an answer.

Source Triangulation

Blend academic, news, technical docs & open data; reduce single‑source bias.

Agent Critique Cycle

Independent agents challenge assumptions before synthesizing consensus.

Transparent Rationale

Every conclusion links to supporting snippets, citations & agent arguments.

Who It's For

Academic researchers, investigative journalists, policy analysts, competitive intelligence teams, and anyone validating claims under time pressure.

How It Works

Enter a query or investigative prompt. Specialized agents retrieve, score, and summarize evidence. They then debate conflicting viewpoints, converge on a position, and surface dissent where uncertainty remains.
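As a rough illustration of the converge-and-surface-dissent step, here is a minimal Python sketch. It is not Search Overseer's actual implementation: the `AgentFinding` type, the `converge` function, the score-weighted vote, and the `dissent_threshold` parameter are all assumptions made for this example.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentFinding:
    agent: str      # hypothetical agent name
    position: str   # the agent's stance after reviewing its evidence
    score: float    # evidence-quality score in [0, 1] (assumed scale)


def converge(findings, dissent_threshold=0.2):
    """Return (consensus position, dissenting findings).

    Assumption: consensus is the position with the highest total score,
    and a minority finding counts as dissent only if its position holds
    at least `dissent_threshold` of the total score.
    """
    if not findings:
        raise ValueError("no findings to converge on")
    weight = defaultdict(float)
    for f in findings:
        weight[f.position] += f.score
    total = sum(weight.values())
    consensus = max(weight, key=weight.get)
    dissent = [
        f for f in findings
        if f.position != consensus
        and total > 0
        and weight[f.position] / total >= dissent_threshold
    ]
    return consensus, dissent


findings = [
    AgentFinding("academic-agent", "claim holds", 0.9),
    AgentFinding("news-agent", "claim holds", 0.7),
    AgentFinding("data-agent", "claim disputed", 0.6),
    AgentFinding("docs-agent", "unclear", 0.1),
]
consensus, dissent = converge(findings)
print(consensus)                   # claim holds
print([f.agent for f in dissent])  # ['data-agent']
```

In this toy run the weakly supported "unclear" stance falls below the threshold and is dropped, while the better-supported disagreement is kept and shown alongside the consensus.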

Why Trust It

Instead of one opaque model output, you see structured reasoning steps, supporting excerpts, and confidence signals derived from source diversity and agreement metrics.
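One way a confidence signal could be derived from source diversity and agreement is sketched below. The specific metrics are assumptions chosen for illustration, not the product's documented formula: diversity is the normalized Shannon entropy of source types, agreement is the share of evidence on the majority side, and the two are simply multiplied. The `Evidence` type and the source-type labels are hypothetical.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    source_type: str  # hypothetical label, e.g. "academic" or "news"
    supports: bool    # whether this snippet supports the claim


def source_diversity(items):
    """Normalized Shannon entropy over observed source types, in [0, 1].

    Returns 0.0 when fewer than two source types are present.
    """
    counts = Counter(e.source_type for e in items)
    if len(counts) < 2:
        return 0.0
    total = sum(counts.values())
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    return entropy / math.log(len(counts))


def agreement(items):
    """Share of evidence on the majority side; 0.0 for empty input."""
    if not items:
        return 0.0
    supporting = sum(e.supports for e in items)
    return max(supporting, len(items) - supporting) / len(items)


def confidence(items):
    """Illustrative combination: the plain product of both signals."""
    return source_diversity(items) * agreement(items)


evidence = [
    Evidence("academic", True),
    Evidence("news", True),
    Evidence("technical", True),
    Evidence("open_data", False),
]
print(round(confidence(evidence), 2))
```

Under these assumptions, a claim backed by a single source type scores zero no matter how strongly that source agrees with itself, which mirrors the goal of reducing single‑source bias.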

Privacy & Control

Your queries aren’t used to train public models. Logs can be anonymized or purged. Fine‑grained source inclusion lets you avoid low‑quality domains.

Roadmap

- Custom agent scripting
- Private corpus indexing
- Exportable audit reports (PDF)
- Long‑form literature review mode